Are experts useless?

(I’m trying to get back into the habit of posting once a week or more, so do forgive me if the articles seem a little rusty or unoriginal while I get back into the swing of things!)

There are good reasons to be sceptical of experts. In Philip Tetlock’s book Expert Political Judgment, he shows that lots of “experts” basically just don’t know what they’re talking about. In his famous prediction tournaments, Tetlock encouraged experts to make forecasts about various events (e.g. ‘Will the Soviet Union collapse by 1990?’), and showed that they didn’t do particularly well at all. As Tetlock shows in his later book Superforecasting, experts were trounced by ordinary people who put lots of effort into making accurate predictions.

‘Not particularly well at all’ is being generous, in fact. The thing people often say at this point is that the experts did as well as the proverbial dart-throwing monkey, although Tetlock apparently isn’t the biggest fan of this interpretation of the data. Perhaps they did ever so slightly better than the average chimp? At the very least, I suspect chimps and academics deserve to play in the same league.

And then how about the actual journals these experts publish in? Well, recently we’ve had a load of seemingly reputable scientists (or if not seemingly reputable, at least very famous) just making stuff up and trying to sue people who point out that they’ve made stuff up. Here’s a summary of the story from Experimental History, in case you missed it:

The bloggers at Data Colada published a four-part series (1, 2, 3, 4) alleging fraud in papers co-authored by Harvard Business School professor Francesca Gino. She responded by suing both them and Harvard for $25 million.

Earlier, the Colada boys had found evidence of fraud in a paper co-authored by Duke professor Dan Ariely. The real juicy bit? There’s a paper written by both Ariely and Gino in which they might have independently faked the data for two separate studies in the same article. Oh, and the paper is about dishonesty.

The ways in which Gino and Ariely faked their data are so blatant that it should scare us: if they were a little more competent, maybe they would’ve gotten away with it.

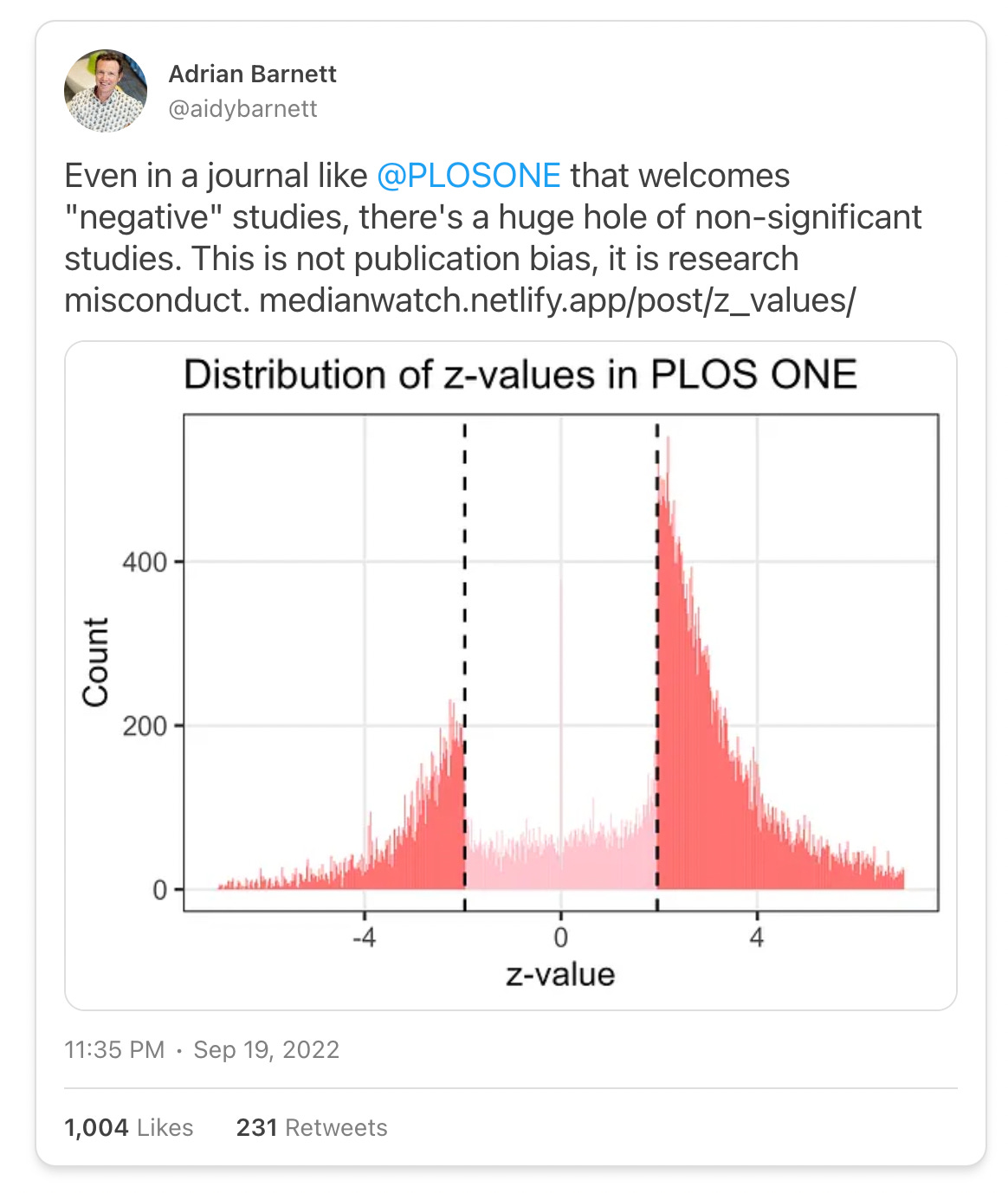

But even if we forget about fraud, science is in a bit of a state. Publication bias, where studies that are interesting and/or statistically significant are also more likely to be published, means that it can be hard to trust published studies even if they did everything right. Take a look at this tweet:

The dotted lines show the thresholds at which studies become statistically significant. The sparsely populated middle zone and the huge spike of studies in the darker red zone show both that many more statistically significant studies are published than studies that don’t reach significance, and that there seems to be quite a lot of fiddling going on to bring p-values just below 0.05.

Some fields are worse than others. In 2015, a load of psychologists got to work on replicating 100 psychology studies, and found a couple of things. The first thing they found was that the average effect size in the replication studies was about half of the average effect size in the original studies. The second thing they found was that only 36% of replications had significant results (!), compared to 97% of the original studies.

Another thing to consider is that, even among studies that do replicate, we can’t really be sure they’re proving what they claim to be proving. A study might claim that X causes Y, when in fact it doesn’t do much to prove there’s any causal connection at all. So even if the study does turn out to replicate, that doesn’t mean we should assume their causal identification strategy is any good.

What should we make of all this stuff? One possible take is: ‘expertise, especially in the social sciences, is all a load of bullshit. Their studies often don’t replicate, many of the studies that do replicate are still crap, and the experts carrying out the studies aren’t any good at predicting real life events’.

And yes, maybe. But there’s also a case for being a bit more optimistic. For one thing, it isn’t that hard to figure out which studies aren’t going to replicate. If you’re serious about looking at research and trying to guess whether it’s bogus, you can probably do a pretty good job with just a little bit of training.

The 80,000 Hours website has a pretty fun game where you can predict whether a psychology study replicated or not. (If you’re interested in playing it, do so now before I tell you how you can win.) I hate to boast, but I think I did pretty well, getting the equivalent of 17.85 out of 21 studies correct.

But what was interesting to me is that I clearly wasn’t putting in a ton of effort trying to figure out which studies were likely to replicate; it was just objectively easy. If you click on the ‘more details and stats from this paper’ button and note that the p-value is fairly close to 0.05 (rather than well below 0.05), the study probably didn’t replicate. If the sample size is very small, you shouldn’t take it very seriously. If the original claim just sounds like obvious BS, downgrade it significantly. Following these rules alone should get you a respectable score.
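For the programmatically minded, the three rules above can be sketched as a tiny scoring function. This is only an illustration of the heuristic: the `Study` fields, the cutoffs (`p_cutoff`, `min_sample`) and the point weights are all assumptions I've made up for the sketch, not calibrated values and not anything the game itself uses.

```python
from dataclasses import dataclass


@dataclass
class Study:
    p_value: float          # reported p-value of the headline result
    sample_size: int        # number of participants
    sounds_plausible: bool  # your own gut check on the claim


def red_flag_score(study: Study, p_cutoff: float = 0.01, min_sample: int = 50) -> int:
    """Add up red-flag points; the weights are illustrative assumptions."""
    score = 0
    if study.p_value > p_cutoff:        # significant, but only just
        score += 2
    if study.sample_size < min_sample:  # very small sample: take it less seriously
        score += 1
    if not study.sounds_plausible:      # sounds like obvious BS: downgrade a lot
        score += 3
    return score


def guess_replicates(study: Study) -> bool:
    """Guess 'replicates' only when the red flags stay below two points."""
    return red_flag_score(study) < 2


solid = Study(p_value=0.001, sample_size=500, sounds_plausible=True)
borderline = Study(p_value=0.04, sample_size=500, sounds_plausible=True)
print(guess_replicates(solid), guess_replicates(borderline))
```

A real forecaster would want a probability rather than a yes/no answer, but even a crude tally like this captures the spirit of the rules.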

In a much more comprehensive version of pretty much the same thing, Alvaro De Menard discusses how he made $10,000 forecasting which studies would replicate in a prediction market. The basic strategy was similar to mine, although he took many more factors about each paper into account. Here’s his list of factors, plus his notes:

p-value. Try to find the actual p-value, they are often not reported. Many papers will just give stars for <.05 and <.01, but sometimes <.01 means 0.0000001! There's a shocking number of papers that only report coefficients and asterisks—no SEs, no CIs, no t-stats.

Power. Ideally you'll do a proper power analysis, but I just eyeballed it.

Plausibility. This is the most subjective part of the judgment and it can make an enormous difference. Some broad guidelines:

People respond to incentives.

Good things tend to be correlated with good things and negatively correlated with bad things.

Subtle interventions do not have huge effects.

Pre-registration. Huge plus. Ideally you want to check if the plan was actually followed.

Interaction effect. They tend to be especially underpowered.

Other research on the same/similar questions, tests, scales, methodologies—this can be difficult for non-specialists, but the track record of a theory or methodology is important. Beware publication bias.

Methodology - RCT/RDD/DID good. IV depends, many are crap. Various natural-/quasi-experiments: some good, some bad (often hard to replicate). Lab experiments, neutral. Approaches that don't deal with causal identification depend heavily on prior plausibility.

Robustness checks: how does the claim hold up across specifications, samples, experiments, etc.

Signs of a fishing expedition/researcher degrees of freedom. If you see a gazillion potential outcome variables and that they picked the one that happened to have p<0.05, that's what we in the business call a "red flag". Look out for stuff like ad hoc quadratic terms.

Suspiciously transformed variables. Continuous variables put into arbitrary bins are a classic p-hacking technique.

General propensity for error/inconsistency in measurements. Fluffy variables or experiments involving wrangling 9 month old babies, for example.

Alvaro made a lot of money because his methods were good. And once he figured out the tricks for predicting which studies would replicate, he could do it pretty quickly: he needed around 140 seconds per paper to come up with a reasonably good prediction in his head, or he could manually input data about the paper into his model and get a result in about 210 seconds.

A study from 2018 convinced me that it’s actually often quite intuitive which papers are likely to be bogus. 233 people without a PhD were shown 27 different psychology studies, and predicted which would replicate with above-chance accuracy (59% success rate). When they were told a bit more about how to interpret the study in an added description, the success rate increased to 67%. That seems pretty good for a load of random people without PhDs, especially given that it’s not like they’d spent a huge amount of time trying to research how to tell when a study will replicate.

The fact that it isn’t that hard to tell which studies are likely to replicate doesn’t mean that the replication crisis isn’t a problem, but I do think it should move us away from total despair at the state of academia. Maybe a load of this stuff is bullshit, but at least it seems like the average reader of this blog can put in some time to figure out whether a specific study is likely to be bullshit.

I’m also quite optimistic about surveys of experts as a way of figuring out what’s actually likely to be true. If you want to know whether a policy is good for the economy, it seems much easier and more reliable to consult the IGM Expert panels than to conduct a miniature literature review.

Do non-bank financial intermediaries pose a substantial threat to financial stability? I have no idea. I don’t even really know what the question means, and definitely don’t know enough about the topic to competently sift through the literature. But I can just look at the survey of experts and come to a quick conclusion about the likely answer:

What about the forecasting stuff? When I was at the Effective Altruism Global conference in 2022, I went to a talk by a guy who had served as some sort of higher-up in the US military and had spent much of his career focusing on reducing the risk of nuclear war. This being 2022, he started the talk by giving his prediction about whether the Russia-Ukraine conflict would lead to a nuclear exchange by the end of the year. I don’t remember the exact figure he gave, but I remember it being astoundingly high, to the extent that I figured that maybe he had no idea what he was talking about.

But as he went on, it became apparent that he absolutely did have an idea what he was talking about. He would talk convincingly about different possible outcomes, and was very reasonable in his responses about how the US should react in different circumstances. If he and a random superforecaster had to write a report on how likely nuclear war was, I would expect his report to be much more insightful, even if his actual numerical predictions were bad.

The ‘superforecasters vs. experts’ framing of Tetlock’s work might be the wrong way of thinking about it. Superforecasters rely on the work of experts to inform their predictions, and are better at aggregating different expert viewpoints and datasets to create predictions, but this doesn’t really lend itself to the ‘experts are totally useless’ view. It doesn’t seem that surprising that a load of people who love making predictions are better at making predictions than academics are.

There’s also some other interesting research by Misha and Gavin that suggests that maybe the gulf between forecasters and experts isn’t as large as most people think. Specifically, with regard to forecasters versus the intelligence community, they write:

A common misconception is that superforecasters outperformed intelligence analysts by 30%. Instead: Goldstein et al showed that the Good Judgment Project's best-performing aggregation method outperformed the intelligence community, but this was partly due to the different aggregation technique used (the GJP weighting algorithm performs better than prediction markets, given the apparently low volumes of the ICPM market).

The forecaster prediction market performed about as well as the intelligence analyst prediction market; and in general, prediction pools outperform prediction markets in the current market regime (e.g. low subsidies, low volume, perverse incentives, narrow demographics). In the same study, the forecaster average was notably worse than the intelligence community.

I think my overall view here is that there’s a common take that social science research and experts aren’t really very insightful at all. This is an overcorrection. Yes, incentives in academia are a bit shit. Yes, some experts (and especially well-known experts) are bullshitters. And yes, experts probably aren’t always amazing at making predictions related to their field of study. But you can still get a lot out of reading social science research, it isn’t that hard to figure out which studies are likely to be bullshit, and people who are extremely good at making predictions rely on expert opinion all the time.

Something that I think may be an effect is that in order for collective expertise to work, individual experts have to rely on their own subjective judgment calls. A naive layperson can probably beat the typical expert by taking the average views of all experts taken together (as their errors may cancel out), but when the typical expert adopts that as their rule, the field becomes degenerate.
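The "errors may cancel out" point can be sketched with a toy simulation. Everything here is an assumption made up for illustration: the true value, the noise level, the panel size, and above all the premise that each expert's error is independent of the others'.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

TRUE_VALUE = 100.0  # the quantity the experts are estimating (made up)
NOISE_SD = 20.0     # spread of each expert's independent error (made up)
N_EXPERTS = 25
N_TRIALS = 2000

individual_errors = []
consensus_errors = []
for _ in range(N_TRIALS):
    estimates = [random.gauss(TRUE_VALUE, NOISE_SD) for _ in range(N_EXPERTS)]
    # Error of one typical expert (the first one on the panel).
    individual_errors.append(abs(estimates[0] - TRUE_VALUE))
    # Error of the layperson who simply takes the panel average.
    consensus_errors.append(abs(statistics.mean(estimates) - TRUE_VALUE))

mean_individual_error = statistics.mean(individual_errors)
mean_consensus_error = statistics.mean(consensus_errors)
print(f"typical expert error: {mean_individual_error:.1f}")
print(f"panel-average error:  {mean_consensus_error:.1f}")
```

With independent errors the panel average should land several times closer to the truth than a single expert. The independence assumption is doing all the work, though: if every expert just reports the existing consensus, the estimates become copies of one another and averaging them cancels nothing.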

Of course an expert can be heterodox in their public arguments and take the average in their pragmatic bets, and I suspect almost all experts do this to a degree, but I also expect they entangle the two to a degree.

I'm reminded of the famous "prediction" about the possible death toll of COVID in the US by some high-level epidemiologist in the British government, putting the number at over 2 million in the very early days of the outbreak (I think it's the one referenced here by Dr. Neil Ferguson? https://www.cato.org/blog/how-one-model-simulated-22-million-us-deaths-covid-19). The death toll as of now is 1.1 million (https://covid.cdc.gov/covid-data-tracker/#datatracker-home). This paper ended up driving a lot of policy decisions based on the credentials of the author, but I read the paper, and if I remember correctly there wasn't any reference to confidence intervals, error bars, or anything. It seemed to be literally plugging early, unreliable numbers into a spreadsheet they had already and taking the number that came out at face value without considering much more. What bothered me much more than the number being wrong was that so few people in the establishment seemed to recognize how fake it looked from the start, like they didn't understand how to read data like this. Are epidemiologists actually trained in more statistics than plugging numbers into a formula?

I appreciate that, early in an unknown situation, it is good to know the worst case even if it is unlikely to actually happen, and that the usefulness of models is often greater than the predictions they calculate: knowing that the transmission rate of the disease dominates the early shape of the disease spread leads to actionable recommendations, like social distancing and masks. But then you see this big publication of nonsense numbers like "2 million people are going to die!" and prominent mathematicians on Twitter arguing about what the exponent is for exponential growth of the disease, and no one steps in to say, "guys, it's not exponential; it's sigmoidal. There's going to be an inflection point where the disease spread slows down, and until we hit that, we can't predict where it will be because it involves terms like 'percentage of the population that will eventually get infected,' so maybe we should all calm down a bit, make the best decisions we can in the uncertainty, and save your 5-year, 3 decimal point predictions for your next novel."
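To make the "it's sigmoidal" point concrete, here is a rough sketch comparing plain exponential growth with two logistic (sigmoidal) curves that differ only in their eventual size. The growth rate, starting count and final sizes are invented for illustration, and a simple logistic curve is a stand-in I've chosen here, not a real epidemiological model.

```python
import math


def exponential(t: float, rate: float, initial: float) -> float:
    """Cumulative cases under unchecked exponential growth."""
    return initial * math.exp(rate * t)


def logistic(t: float, rate: float, initial: float, final_size: float) -> float:
    """Cumulative cases under logistic growth that levels off at final_size."""
    return final_size / (1 + (final_size / initial - 1) * math.exp(-rate * t))


RATE = 0.2       # per-day growth rate (illustrative)
INITIAL = 100.0  # cases on day 0 (illustrative)
SMALL = 1e6      # one hypothetical final outbreak size
LARGE = 1e8      # another, 100 times bigger

for day in (0, 10, 20, 30, 60, 90):
    exp_cases = exponential(day, RATE, INITIAL)
    small_cases = logistic(day, RATE, INITIAL, SMALL)
    large_cases = logistic(day, RATE, INITIAL, LARGE)
    print(f"day {day:2d}: exp={exp_cases:.3g}  small={small_cases:.3g}  large={large_cases:.3g}")

early_small = logistic(20, RATE, INITIAL, SMALL)
early_large = logistic(20, RATE, INITIAL, LARGE)
late_small = logistic(90, RATE, INITIAL, SMALL)
late_large = logistic(90, RATE, INITIAL, LARGE)
```

In the early rows the three curves are almost indistinguishable, which is the trouble: data from those days cannot tell you whether the outbreak ends at the small or the large size, even though the two futures end up roughly a hundredfold apart.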

All of which is probably why I'm not in charge, because people like to believe their leaders are super-humanly knowledgeable and in command of the situation. Until they find out the truth, then they get pissed and storm the Bastille. And better leadership would involve showing confidence in how to navigate uncertainty, which is harder. But it all speaks to me of a failure in the education of our upper class and poor selection of second-generation, reverted-to-the-mean, hereditary elites that worries me a lot. At least in other systems of hereditary nobility there was supposed to be a seneschal doing the actual management of a noble's property because they understood the noble was probably a fool.

Sorry to rant on someone else's comments section. This is a topic I think a lot about, too.