Extreme Honesty

Lots of the people I associate with (largely Effective Altruists, Rationalists, and forecasters) seem to be believers in something that I will call ‘extreme honesty’. This is where, rather than just writing things you believe to be true, you go to extreme lengths to ensure that people can verify that what you’re writing is likely to be true. For instance, rather than merely writing a piece about what you think is likely to happen in the war in Ukraine, you also make an explicit forecast about the chance of some verifiable/falsifiable outcome occurring. This allows people to check your track record, and get an understanding of whether what you’re saying is likely to be correct. As Benjamin Todd writes of a set of forecasts related to the war in Ukraine: “This is how the news should look”.

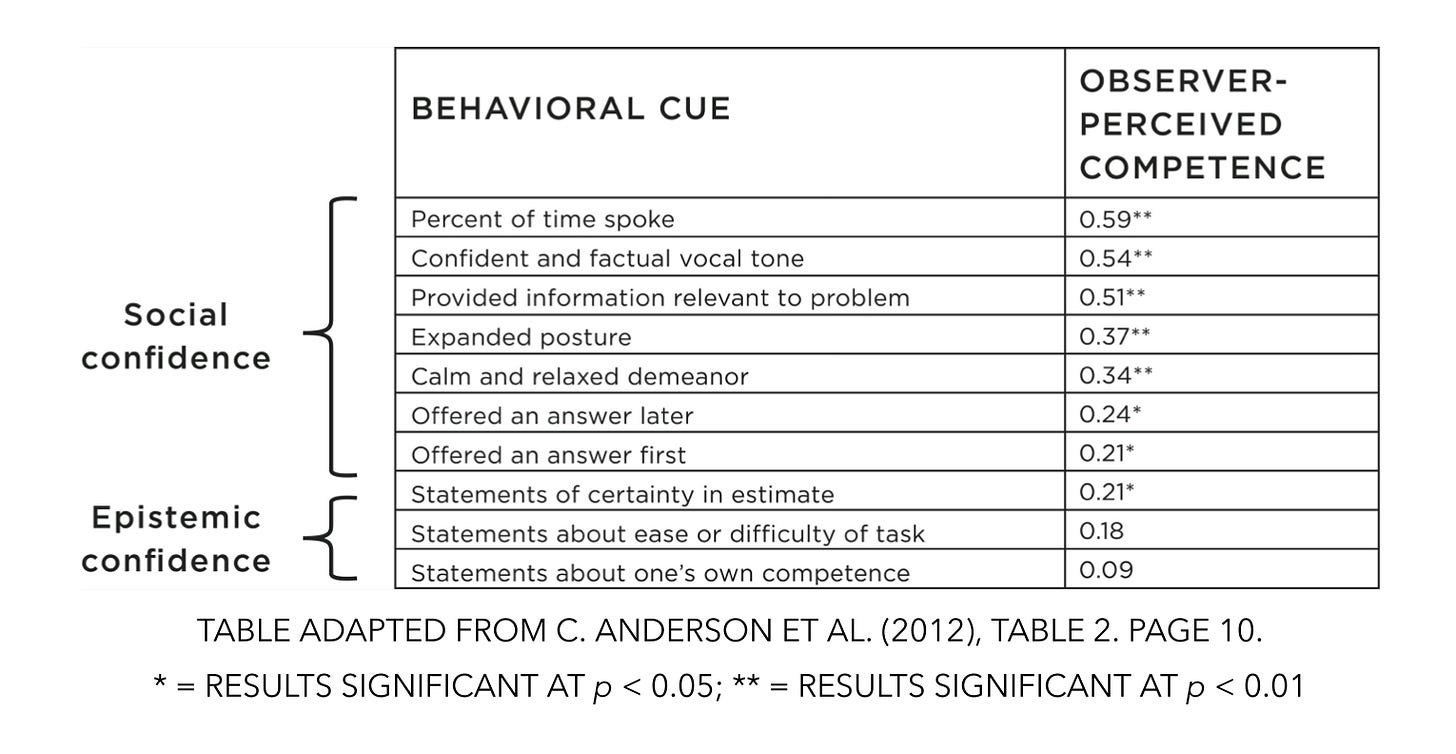
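To make "checking a track record" concrete, here is a minimal sketch of one common way to score a set of probabilistic forecasts, the Brier score (the mean squared gap between the stated probability and what actually happened). The post itself does not name a scoring rule, so the choice of the Brier score, the function name `brier_score`, and the example numbers are all illustrative assumptions rather than anything taken from the forecasts discussed above.

```python
from typing import Sequence


def brier_score(forecasts: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    Lower is better: 0.0 is a perfect record, and always answering 50%
    scores 0.25 on yes/no questions.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    if not forecasts:
        raise ValueError("need at least one resolved forecast")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical track record: three resolved yes/no questions.
# Each forecast is the stated probability that the event would happen;
# each outcome is 1 if it happened and 0 if it did not.
example_forecasts = [0.9, 0.2, 0.7]
example_outcomes = [1, 0, 0]

if __name__ == "__main__":
    print(round(brier_score(example_forecasts, example_outcomes), 3))
```

On these made-up numbers the score works out to roughly 0.18, a little better than the 0.25 that a forecaster who always said 50% would get. A score like this only says something once a reasonable number of forecasts have resolved.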

Another example is Julia Galef’s claims about ‘epistemic confidence’. Epistemic confidence refers to being certain about what is true - for instance, if someone says that there is a 99% chance that they will be the Prime Minister someday, they have an extremely high amount of epistemic confidence. Galef claims that many people express too much epistemic confidence in a bid to impress people, whereas what people are really impressed by is ‘social confidence’, which just refers to acting at ease in social situations, speaking in a tone that makes you seem secure in yourself, and so on. The implication of a lot of what Galef says is that it’s good to exude social confidence, but displaying too much epistemic confidence (e.g. saying ‘I’m almost certain my start-up will succeed!’) may be a breach of extreme honesty if the chance of your start-up actually succeeding is low, as it almost certainly will be.

I think the reason that extreme honesty has become a social norm for Effective Altruists (which is similar to, although distinct from, what the people at Open Philanthropy refer to as ‘Reasoning Transparency’) is that a clear and honest evaluation of confidence levels seems hugely important when it comes to evaluating the cost-effectiveness of charities, which is pretty much the reason that Effective Altruism exists. This makes sense - if you want to know whether you should be donating your money to malaria charities as opposed to UNICEF, it seems really useful to figure out what the limitations of your research into malaria charities are, or exactly how confident you are that the donation of insecticidal nets will result in fewer people dying. Similarly, the sorts of people who want to do the most good effectively by honestly evaluating charities are also likely to be the sorts of people who appreciate extreme honesty in general.

But I think this cultural norm in Effective Altruism may have become ubiquitous to the extent that it could plausibly come to harm Effective Altruists as the movement becomes bigger. Let’s take Benjamin Todd’s claim that all (or many) news articles ought to come with a set of falsifiable and quantifiable predictions. Suppose that an EA Magazine is funded, and every wishy-washy sounding prediction that someone makes must be followed by an explicit forecast. The problem here may be that writing interesting and useful articles about what is likely to happen in the future does not necessarily require the same skill-set as making quantifiable forecasts. There are plenty of writers who I read (and whose views I take into account when making explicit forecasts) who I doubt would actually be good forecasters themselves. Perhaps they’re not particularly inclined towards data analysis or putting numbers on things - this does not mean that their insights aren’t helpful, and putting some forecasting track-record next to their byline in the magazine seems like it could do more harm than good.

When I read some news article about who is likely to replace Boris Johnson as Conservative leader, I don’t particularly care whether the author has a superb forecasting track record, I just care about whether they’re writing things that I didn’t know already, providing some level of original insight, and/or reminding me of salient factors that I may have underestimated or forgotten about.

Moving on to Galef’s example, it would certainly be nice if it were the case that people judged others negatively if they were obviously overconfident in their predictions. When I first read her excellent book The Scout Mindset, I thought that she might refer to research showing a negative correlation between someone saying that they were certain that they would be successful in some task, and how competent they were perceived to be by others. In fact, the research not only doesn’t show a negative correlation between claims of certainty and perceived competence, but shows a positive correlation. As can be seen above, there is a significant (although small) positive correlation between someone expressing certainty and how competent they were perceived to be by others, and further insignificant (but positive) correlations between other forms of epistemic confidence and perceived competence. That being said, it is reassuring that all forms of social confidence seem to be more strongly correlated with perceived competence than do forms of epistemic confidence.

I also have a worry that extreme honesty has emerged as something that you ought to do because it is good in and of itself, rather than because it results in good things. It’s true that there are advantages to having a norm of extreme honesty in a social movement, but I think there should be further discussion about the situations in which extreme honesty is likely to undermine your effectiveness rather than strengthen it. There is a reason that most founders of start-ups don’t tell potential hires that the chance of success is only around 3%. There is a reason that most newspaper columnists don’t write explicit numerical forecasts which allow bad-faith actors to pick out their worst forecasts to demonstrate their incompetence. There is a reason that most highly effective institutions, companies, and people don’t engage in extreme honesty all the time.

An issue is that focusing on extreme honesty makes you liable to focus only on things which can be quantified in this way. That said, I'm also not sure the big issue with the news is that it doesn't make empirically verifiable claims, so much as that it either lies outright or writes to the converted with a clear bias.

Strong agree. I'd go further and say most of my favourite writers might be actively less accurate, even in purely numerical terms, if they adopted 'extreme honesty'. A lot of people think through writing (including myself) and writing columns or posts about an issue is one of the best ways to figure out what you know about it and what you don't, and to build a detailed mental map of the issue. But there are many topics that (a) most people aren't confident enough to make quantifiable predictions about but which (b) feed into other topics, such that knowledge of the 'unquantifiable'* topic can lead to better quantifiable predictions on other topics. If you prevent people from writing on these 'unquantifiable' topics, they won't be able to develop good knowledge about them, and in turn will make worse predictions when dealing with related questions.

To take a pretty narrow example from my own thinking, my understanding of nationalist / unionist dynamics at Queen's University Belfast would definitely not be amenable to quantification: some people have tried stuff like 'how many unionists vs nationalists would respond in surveys that they feel uncomfortable expressing their political beliefs among peers', but there are just way too many confounding factors there. So if I were holding to 'extreme honesty', I'd avoid writing or speaking about that topic. But that would make it incredibly difficult for me to get clear about what I know, what I don't know, and what I believe. And since my thinking about this topic quite heavily informs my thinking about Northern Irish politics,** extreme honesty would make me actively less accurate when I come to make numerical predictions about Assembly elections or whatever.

I think this applies even more to actual good writers than it does to just some schmuck like me. The most interesting people almost always find certain things uniquely salient, even if they can't quantify what this salience means: think about how Tyler Cowen often asks follow-up questions in interviews that seem odd or even bizarre at first blush, only to lead to incredibly helpful responses. Suppressing this individual interest in things that people just happen to find salient, only because they can't quantify their thinking, would lead to less well-formed reasoning about topics further downstream.

* I don't mean to imply that these are topics where you simply CANNOT make quantifiable predictions, only that most people would not.

** QUB is a pretty representative sample of relatively young and relatively affluent people from NI, and there's good numerical reason for thinking this group will have outsized influence on election results going forward.