I got a wisdom tooth extraction on Monday, and I’ve been pretty worried about getting dry socket, a fairly common postoperative complication that can cause extremely severe pain for a week or so. Symptoms typically emerge a few days after the extraction, and it’s very tricky to figure out how likely it is unless you’re already in agony - and by then, it’s too late to avoid it (although you can get your dentist to alleviate the pain).

The testimonies on Reddit about what it’s like are pretty terrifying. Here’s a comment from a thread titled ‘What’s more painful than childbirth?’:

“I had 4 dry sockets (exposed nerves) after wisdom teeth removal and the nurse packing the open wounds said she has given birth three times and the dry socket pain is way worse. I haven’t given birth yet so I’ll see soon. Lol”

And another one:

“It’s definitely different pain but I also had dry socket in two of my teeth and it is the only thing that compared to childbirth. And I had an unmedicated birth!”

I really don’t want to get dry socket - not having to give birth is one of the key advantages of being born male, so it would be a pity to go through something similarly painful and not at least get a kid out of it at the end. I’d also quite like to know how likely it is that I get dry socket. So, how do I figure out how likely it is?

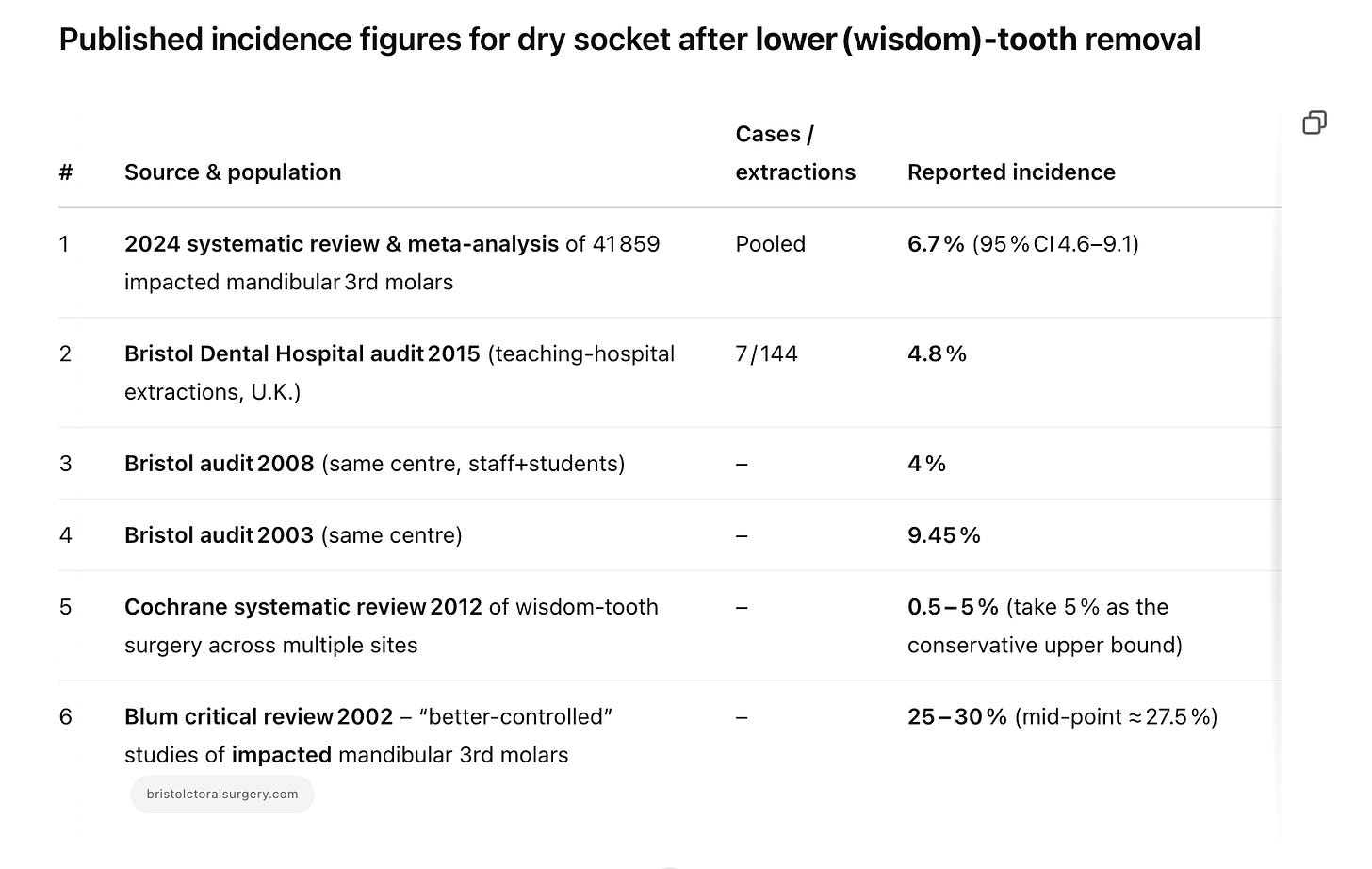

Well, OpenAI released o3 yesterday, and I thought this was a pretty reasonable test of its ability - can it tell me how likely it is that I’ll get it? I started by asking it to look up some studies, and take the median probability of dry-socket for someone who had a wisdom tooth extracted as a base-rate. It thought for around three minutes, and then produced this:

How to read this collection

The meta‑analysis (6.7 %) is the best “global” estimate—large sample, modern techniques.

The teaching‑hospital audits (4–9 %) show real‑world variation in one U.K. centre over time.

The Cochrane ceiling (5 %) reflects a mix of centres and skill levels; it likely includes both upper and lower wisdom teeth, so it sits slightly lower than mandibular‑only studies.

The Blum figure (≈ 27 %) is an older, surgery‑heavy dataset deliberately restricted to impacted lower molars; it marks the upper edge of plausibility in difficult cases.

The overall picture: contemporary practice clusters around 4 – 7 %, with rare extremes under very easy or very challenging conditions. The median of ≈ 5.9 % is therefore a reasonable single base rate when you have no patient‑specific information.

I thought this was pretty impressive! When I had looked at the Wikipedia page, it had only given the figure from the Blum critical review, so I had been under the impression that the probability was between 25 and 30%. The fact that o3 found this meta-analysis was useful. I haven’t looked particularly closely, but this looks better than the Blum review, and I’m relieved that the likelihood might be lower than I thought it was.

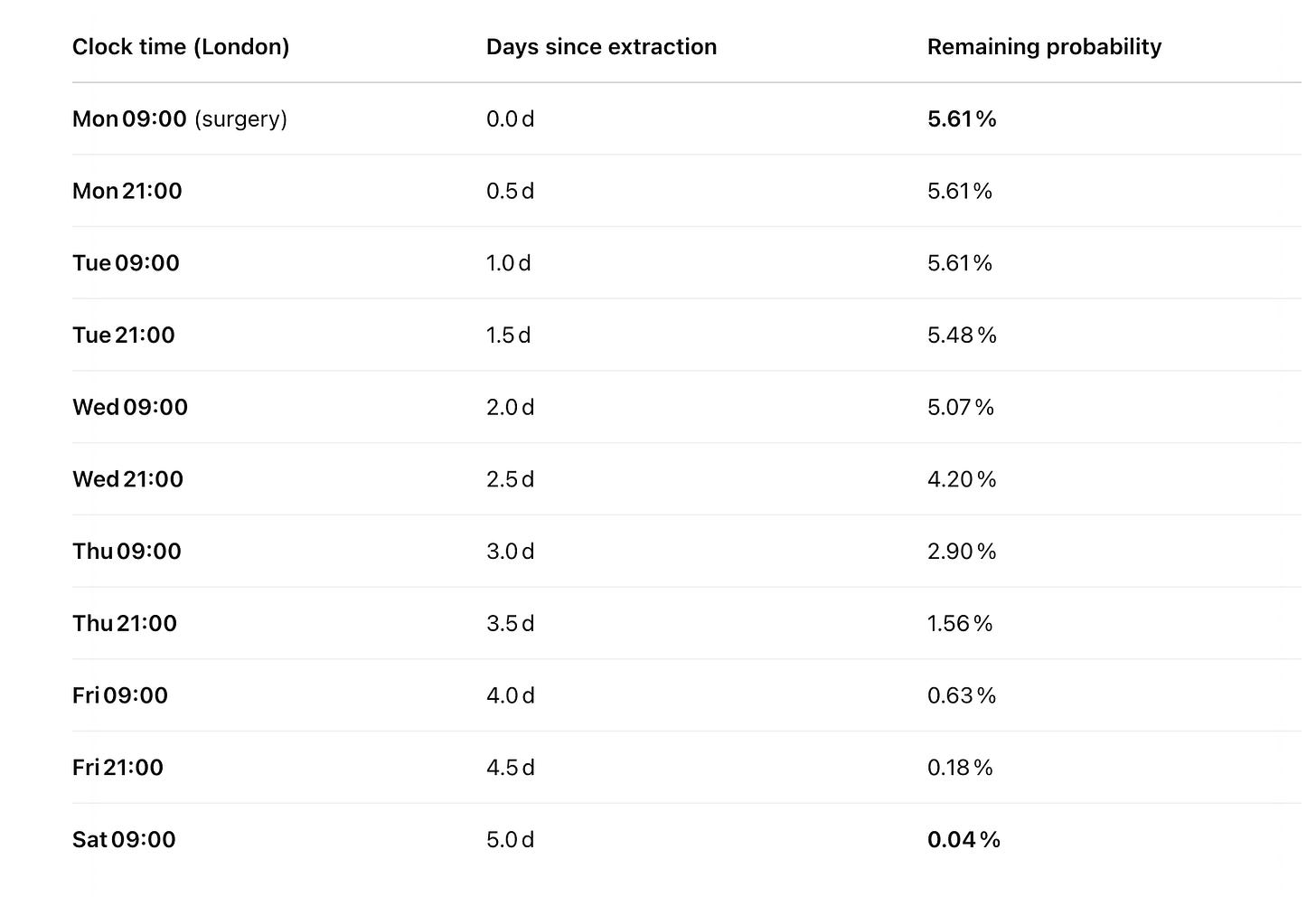

I also wanted to figure out how long I would have to go without getting any symptoms of dry socket before I could feel pretty confident that I had avoided it entirely. So, I asked o3 to find as much evidence as it could on this, and give me a detailed timeline to let me know when I can stop freaking out (I also threw in some of my personal characteristics so that it could adjust for them). After thinking for a minute or so, it produced this:

Conditional probability that a dry socket will still appear

(assuming you stay symptom‑free, same care routine)

After Saturday morning the figure drops below 0.1 % and continues toward zero.

How these numbers were produced

Base rate – weighted mean of 12 studies gives 6.8 %.

Personal modifiers (non‑smoker, no straw, male, mild cold, two early spits, salt rinses) scale that to 5.6 %.

Timing curve – evidence says first pain is almost always day 2 and day 4, rarely beyond day 5.

At each checkpoint I removed the probability mass already “spent” and renormalised.

I know posting output from ChatGPT is a bit boring, so please forgive me, but I’m impressed! The studies look legit, and while I’m not smart enough to figure out how well it modelled the time curve (the rate of decay looks a little aggressive to me FWIW), it mostly looks pretty plausible, and has been useful for me in thinking about when I can relax1.

It did a much better job than Claude or Grok 3 would have, although maybe Gemini 2.5 Pro could produce something similar. It also found a seemingly reasonable way for me to reduce the probability of getting dry socket (buying and using 0.12 % chlorhexidine mouth‑rinse) on the basis of a few RCTs it found, and then calculated how much I should be willing to pay for mouthwash depending on how much value I place on avoiding dry socket entirely. It turns out that this mouthwash is easily worth the money if o3’s claims are anywhere close to correct.

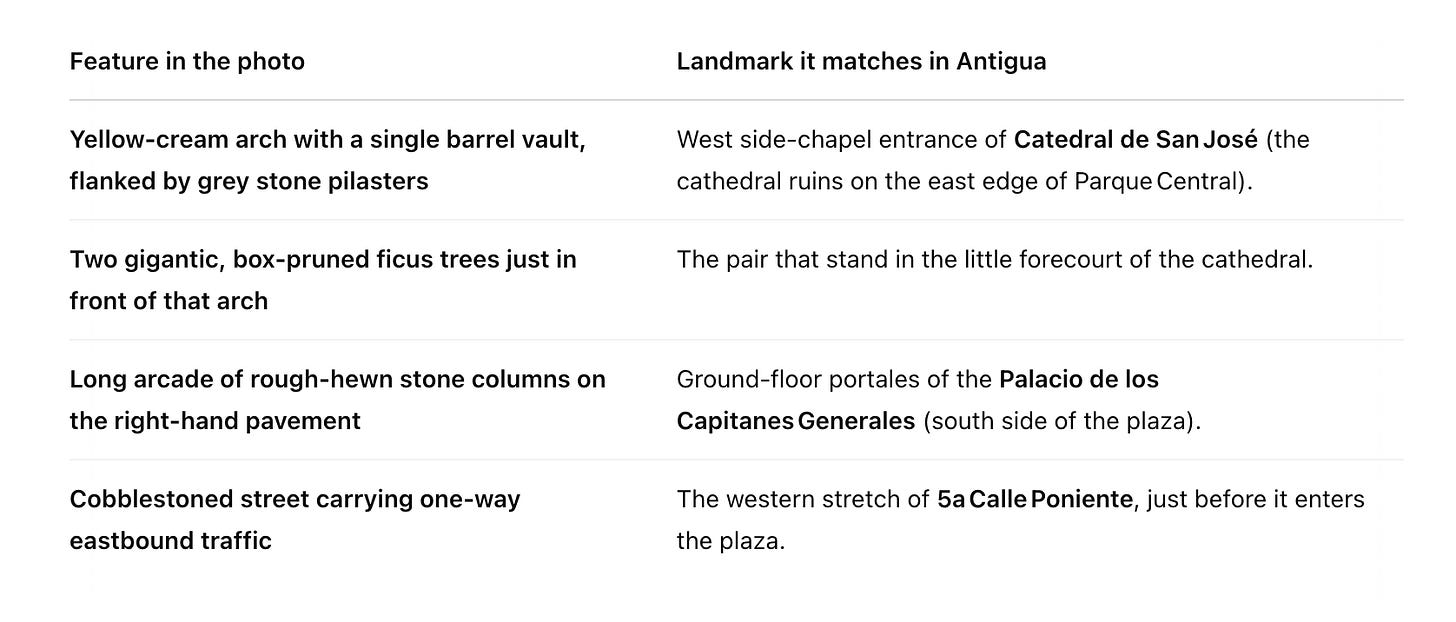

What else can it do? I uploaded this photo and asked it to guess where I was when I took it.

The first guess was correct - it knew that this was Antigua, Guatemala. But I wanted to go further: could it figure out exactly where I was? It thought for a few minutes and then produced this:

Here’s where the scene lines up on the ground‑truth map of Antigua:

You’re standing in the roadway of 5a Calle Poniente, a few metres west of the corner with 5a Avenida Sur, looking east‑northeast toward the cathedral façade and the bulk of Volcán de Agua in the far distance.

I searched on Google Maps to figure out whether it was right. I had to move around a few steps, which took around 10 seconds, to get to the exact spot I been in - I would say this was pretty much bang on the money!

Reasoning models like o3 are insane, and progress isn’t slowing down. When new models come out, I think people have a tendency to point to the things that they can’t do. One tweet went viral pointing out that o3 failed on a task that any human could do in a few seconds, even after thinking for thirteen minutes. And yes, it’s true that there are things that we would probably expect these models to be able to do that they just can’t.

But they’re getting better, and it seems like lots of the ‘tasks that models can’t do’ tend to fall without much notice. In early 2023, Noam Chomsky wrote an op-ed in the New York Times in which he said the following:

For this reason, the predictions of machine learning systems will always be superficial and dubious. Because these programs cannot explain the rules of English syntax, for example, they may well predict, incorrectly, that “John is too stubborn to talk to” means that John is so stubborn that he will not talk to someone or other (rather than that he is too stubborn to be reasoned with). Why would a machine learning program predict something so odd? Because it might analogize the pattern it inferred from sentences such as “John ate an apple” and “John ate,” in which the latter does mean that John ate something or other. The program might well predict that because “John is too stubborn to talk to Bill” is similar to “John ate an apple,” “John is too stubborn to talk to” should be similar to “John ate.” The correct explanations of language are complicated and cannot be learned just by marinating in big data.

Okay, let’s ask an LLM today what it means if we say that “John is too stubborn to talk to” (we don’t even need to use a reasoning model here). Here’s Claude 3.7 Sonnet:

When you say "John is too stubborn to talk to," you're expressing that John's stubborn nature makes conversation with him difficult or unproductive. This could mean:

John refuses to listen to other perspectives

He won't change his mind even when presented with evidence

Discussions with him tend to be frustrating because of his inflexibility

You might feel it's not worth the effort to engage with him

This is a subjective assessment about John's character and implies that his stubbornness has reached a level where meaningful dialogue seems impossible or too frustrating to attempt.

Has anyone updated significantly on the fact that tasks that people claimed would take a very long time to fall have now fallen? It doesn’t seem like it (well, maybe people who were already sympathetic to the arguments about AI progress, but very few of the hardcore sceptics seem to have shifted very much).

What next?

Smart people have made serious efforts to predict where things are going. AI 2027 is the most serious effect to write a compelling narrative about what sort of thing could happen, and it has enough evidence to back it up that I think we should take it seriously (note: it was written in part by Eli Lifland, a friend of mine who forecasts with Samotsvety).

Another cool effort to show the direction things might be going in is the Agent Village, where you can watch four AI agents work together to try to raise money for charity. The agents have been given access to a computer and a gmail account, and basically can make their own choices about how to raise the money.

The agents begin streaming at 7pm UK time every day, and you can go and leave comments giving them advice on how to achieve their goal. They’re raising money for Helen Keller International. They did research to pick an effective charity, created a Twitter account, started a fundraiser, and have managed to get people to donate $315 (as of Thursday, April 17th).

They’re honestly pretty endearing to watch. They take hours trying to do simple things like share a Google doc, they get distracted when commenters suggest they take breaks and play Wordle, and are generally much less competent than a team of humans would be.

But imagine when agents are better. There will be a time when you can have 100 or 1000 agents working together to achieve some goal. They’ll be optimised to use the internet in ways that current agents aren’t able to. They might be 10x or 100x smarter than current agents. Maybe it won’t be a country of geniuses in a data centre, but a company of agents doing whatever they can to make money (or achieve another goal) on the web.

This probably isn’t that far off in the future. And it’s unclear what will happen. I feel very uncertain about how the world is going to change. Where are we headed?

I have a slight feeling that someone smarter than me will point out why the model here isn’t particularly good, but I’m going to preregister here that this will make very little difference to the views I have on AI progress, I was more impressed with o3’s process and the way it thought about the question than the final output, which I have some scepticism about.

I'm assuming that you DID check whether the studies the LLM found/"found" used for its answers actually exist and the numbers in its model are in them?

My biggest practical problem with them (amazing as they are for *manipulation of symbols/words within their universe*) is their disconnect from reality; ie that they not only present data or sources that don't exist but have no idea that they are doing it. For me it happens ALL THE TIME, hallucinated quotes, papers and whole books, especially when there's nothing there in the world that would satisfy my query; but oddly, also even when there's no need.

So a tool that's uncannily, amazingly good at, for example, unpacking my stream of consciousness in a pseudo-therapeutic way (tho tends to fall into a infuriatingly "validating" mode unless it's corrected frequently) or drafting a rough outline of a piece of writing based on my ideas I "dictate", supplementing it with some extra content REPEATEDLY produces fake quotes from a text that exists in various version in public domain, or invents a one out of five sources. And when I present it with a thesis it will almost never argue against/challenge it, often hallucinating information in support.

I'm not even saying that they are not edging towards AGI. I'm saying that they have a massive "knowledge" problem. So, developing an ability to reason (or it's functional simulacrum) is impressive; but useful reasoning is truth conditional. Valid arguments are pretty, but we need sound ones. And models that confidently produce valid arguments that are based on false premises are a big problem. It's possible that the problem can be very easily rectified by directly feeding the model true data, of course.

The Village was stunning for me to see. I need something like this to do my own version of forecasting research. I know you have written about aggregation of "expert" opinions/forecasts. I have been following the research comparing human experts with actuarial/algorithmic approaches for decades. Have read all the Good Judgement/Tetlock clan stuff. Interesting how some LLMs use MOE (Mixture of Experts). I am trying to do something in this area that nobody has done. Agents like this will help. I plan to clone manifold.markets as part of my project. Part of the project will test if people's predictions can be improved in a Real World setting. That's where the altruism comes in: saving people money by teaching them how bad the "experts" are and showing them how to do better. I'm a retired Psychologist. I want to publish something about this. If you were interested, I'd appreciate your collaboration, and how to contact you.